Pricing and Structure

If you’re weighing ChatGPT vs. Gemini vs. Claude for research, pricing falls into three buckets: (1) solo plans for individuals, (2) team pricing for small orgs, and (3) enterprise options. Below is a quick, current snapshot (USD; regional taxes may apply).

Solo/creator plans

- ChatGPT Plus — $20/month. Highest-value individual tier for most users; adds bigger limits and advanced features over Free. OpenAI

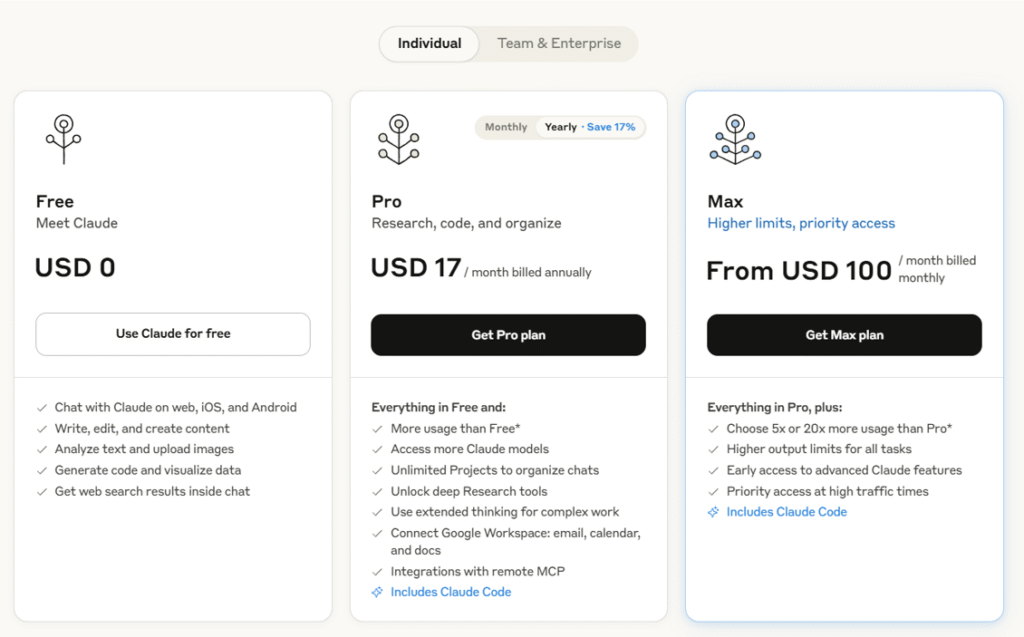

- Claude Pro — $20/month (or ~$17/month if billed annually). Pro capacity and priority access; annual discount shown on Anthropic’s pricing page.

- Gemini (Google AI Pro) — $19.99/month. Includes access to Google’s most capable Gemini models (what was previously branded Gemini Advanced) plus 2 TB storage and AI features across Google apps.

Power/“max” individual tiers

- ChatGPT Pro — $200/month. Unlocks the highest access levels (e.g., GPT-5 Pro and expanded limits). OpenAI

- Claude Max — $100 or $200/month (Max 5x / Max 20x). Higher usage capacity versions of Claude for heavy users. support.anthropic.com

Small teams

- ChatGPT Team — $25 per user/month (annual) or $30 per user/month (monthly). Adds workspace admin controls and higher limits. OpenAI; OpenAI Help Center

- Claude Team — $30 per user/month billed monthly ($25 per user/month billed annually; 5-seat minimum). Designed for collaborative use with more usage than Pro. support.anthropic.com

- Gemini with Workspace — AI now included in Business/Enterprise editions. Example given by Google: a Business Standard customer that used to pay $32/user/month (plan + Gemini add-on) now pays $14/user/month with AI included. (Check your edition and region.) Google Workspace

Enterprise

- ChatGPT Enterprise, Gemini Enterprise, and Claude Enterprise are custom-priced with security/compliance, higher limits, and admin controls. (Contact sales; pricing varies.) OpenAI+1; Google Workspace; anthropic.com

What this means for most buyers

- Best low-cost entry: effectively a tie. Gemini (Google AI Pro) costs $19.99 vs. $20 for ChatGPT Plus and Claude Pro, so pick based on the features you need (browsing/reporting style, file handling, ecosystem).

- Best value for small teams: ChatGPT Team at $25/user/mo (annual billing) is competitively priced and widely adopted; on monthly billing it matches Claude Team at $30/user/mo, a close alternative; Workspace pricing with AI included can be compelling if you already live in Google’s suite. OpenAI Help Center; support.anthropic.com; Google Workspace

- Heavy individual research: If you routinely hit caps, ChatGPT Pro ($200) or Claude Max ($100–$200) offer the highest ceilings. OpenAI; support.anthropic.com
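Because team plans are priced per seat, the yearly gap between them grows quickly with headcount. The sketch below is plain arithmetic on the list prices quoted in this section; it assumes flat per-seat pricing with no seat minimums, discounts, or taxes. Note that the Workspace figure pays for the whole Business Standard suite, not only the AI features.

```python
# Per-seat monthly list prices (USD) quoted in this section; taxes excluded.
# The Workspace figure buys the whole Business Standard suite, not just AI.
PLANS = {
    "ChatGPT Team (annual billing)": 25.00,
    "ChatGPT Team (monthly billing)": 30.00,
    "Claude Team": 30.00,
    "Workspace Business Standard (AI included)": 14.00,
}


def annual_cost(per_seat_monthly: float, seats: int) -> float:
    """Yearly spend for `seats` users at a flat per-seat monthly price."""
    if seats < 0:
        raise ValueError("seats must be non-negative")
    return round(per_seat_monthly * seats * 12, 2)


def compare(seats: int) -> list:
    """Return (plan, annual cost) pairs, cheapest first."""
    rows = [(name, annual_cost(price, seats)) for name, price in PLANS.items()]
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    for name, cost in compare(seats=10):
        print(f"{name:<45} ${cost:>9,.2f}")
    # Google's own example: $32 -> $14 per user/month once AI was bundled.
    print("Yearly saving per user:", annual_cost(32 - 14, 1))
```

For 10 seats this gives $3,000/year for ChatGPT Team on annual billing versus $3,600 on monthly billing, and Google’s $32 to $14 example works out to $216 saved per user per year.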

Where to add images in this section

- Mini pricing table graphic right under the H2 (one row per tool; plans & prices).

- Screenshots of the official pricing pages for ChatGPT, Google One AI Pro, and Claude with small captions like “Captured Aug 2025”, which helps trust & CTR. OpenAI; Google One; anthropic.com

- Callout badges next to recommendations (e.g., “Best for teams”, “Best budget”) to make skimming easier.

Live Web Research & Retrieval

ChatGPT — ChatGPT Search

- Searches the web directly from chat and returns answers with links to sources. Good for timely topics (news, prices, scores). OpenAI

Gemini — Deep Research

- Agentic mode that can automatically browse hundreds of sites, reason through findings, and deliver a structured report—especially useful for broad topics and literature scans. Now powered by Gemini 2.5. Gemini

Claude — Web search

- Native, citation-forward browsing. Claude generates targeted queries, fetches results, and includes citations in its responses; web search is available across plans and via API. anthropic.com+1; Anthropic

Takeaway: For fully automated “research sprints,” Gemini’s Deep Research shines. For cautious, source-backed summaries, Claude’s web search is excellent. ChatGPT is a strong all-rounder for quick answers with links.

Citation Quality & Traceability

- Claude: web-search answers include citations by default. Anthropic

- Gemini: Deep Research produces multi-page reports with source references within the output. Gemini

- ChatGPT: ChatGPT Search supplies links/sources in its results. OpenAI

Takeaway: All three cite; Claude is the most consistently citation-forward in standard chat, while Gemini excels in large, source-rich reports.

Accuracy & Hallucination Resistance

When people search “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?”, they’re really asking: Which one gives the fewest wrong facts—and shows its work? All three still hallucinate at times, but they ship different safeguards and workflows to keep errors in check. OpenAI

ChatGPT (OpenAI)

- What helps: Newer GPT-4.1–family models focus on reliability in long context and instruction-following; OpenAI also publishes safety evals you can check for your use cases. OpenAI+1

- Reality check: Independent reporting and papers in 2025 note that some reasoning variants (e.g., o3, o4-mini) can hallucinate more than prior models, so enable browsing/grounding and ask for citations when accuracy matters. TechCrunch; arXiv

Gemini (Google)

- What helps: Deep Research plans multi-step web checks and returns a report with linked sources; Google’s double-check (“Search Google”) encourages verifying factual claims right in the UI. blog.google+1; Google Workspace

- Reality check: The double-check feature isn’t universal in every region/session, and quality can vary by topic—so skim the sources list before you trust an answer. Google Help

Claude (Anthropic)

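- What helps: Anthropic trains Claude with Constitutional AI (its published, principle-based alignment method) and aims for it to acknowledge uncertainty rather than guess; it also publishes guidance for reducing hallucinations, such as explicitly allowing Claude to say “I don’t know” and grounding answers in direct quotes from source documents. Anthropic; arXiv; Anthropic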

- Reality check: Even with these guardrails, Claude can still be confidently wrong—use explicit instructions like “cite and quote exact lines from sources.” (General best practice; see Anthropic docs.) Anthropic

Quick, practical ways to cut errors (works for all three)

- Force grounding: “Browse the web and provide 3+ primary sources. Link each claim.”

- Ask for uncertainty: “If unsure, say so and list what you’d need to verify.”

- Request quotes + DOIs: “Quote exact lines for key claims and include DOIs/URLs.”

- Constrain the domain & time: “Only use peer-reviewed sources since 2023; exclude blogs.”
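Grounding and quoting prompts only help if you check what comes back. A minimal, vendor-neutral sketch: it flags answer lines that carry no URL or DOI, and tests whether a quoted passage actually appears in a source you have already fetched. The regex and normalization rules are assumptions, and the check confirms form (a link exists, a quote matches), not that the source truly supports the claim.

```python
import re

# A claim counts as "linked" if it carries an http(s) URL or a DOI such as 10.1000/xyz.
URL_OR_DOI = re.compile(r"https?://\S+|\b10\.\d{4,9}/\S+")


def _normalize(text: str) -> str:
    """Lower-case, unify curly quotes, and collapse whitespace to avoid trivial mismatches."""
    for curly, straight in (("\u201c", '"'), ("\u201d", '"'), ("\u2018", "'"), ("\u2019", "'")):
        text = text.replace(curly, straight)
    return " ".join(text.split()).lower()


def unlinked_claims(answer_lines: list) -> list:
    """Return non-empty answer lines that contain neither a URL nor a DOI."""
    return [line for line in answer_lines if line.strip() and not URL_OR_DOI.search(line)]


def quote_found(quote: str, source_text: str) -> bool:
    """True if the quoted passage occurs in the source (ignoring case and whitespace)."""
    return _normalize(quote) in _normalize(source_text)
```

Anything returned by unlinked_claims, or any quote for which quote_found is False, is a line to re-prompt on or verify by hand.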

Verdict for this category

- Gemini: Strong for auditable multi-step web checks (Deep Research with linked citations). blog.google; Google Workspace

- Claude: Good defaults for not guessing and for citation-style answering when prompted. Anthropic+1

- ChatGPT: Broadly reliable with the latest GPT-4.1 family, but verify claims, especially if you switch to experimental “reasoning” modes. OpenAI; TechCrunch

Synthesis & Literature Review Quality

When readers search “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?”, they’re asking which system can ingest lots of material, connect the dots, and surface defensible takeaways with sources. Here’s how each one handles multi-source synthesis and literature-review style work.

ChatGPT (OpenAI)

- How it synthesizes: ChatGPT’s built-in web search returns answers with linked sources, which you can expand into summary tables or structured outlines. OpenAI; OpenAI Help Center

- Working with many papers: Projects let you upload and persist files (PDFs, spreadsheets, images) so the model can synthesize across them—useful for “related work” sections and evidence tables. OpenAI has been increasing file limits for Pro/paid plans. OpenAI Help Center+1

- Long context for bigger reviews: OpenAI’s newer models (e.g., GPT-4.1) advertise very large context windows (up to ~1M tokens in the API), which helps keep more of your corpus “in mind” during synthesis. OpenAI

Bottom line: Strong day-to-day syntheses with easy linking; best when you combine web results + uploaded PDFs inside a Project for continuity across drafts. OpenAI Help Center

Gemini (Google) — Deep Research

- How it synthesizes: Deep Research breaks a topic into steps, searches the web, and returns a consolidated report with key findings and source links, ideal for literature-review style write-ups you can audit. blog.google; Gemini

- Working with many papers: Google documents long-context models (1M-token class) designed to recall details from multiple long documents, which directly benefits cross-paper synthesis. Google AI for Developers; arXiv

Bottom line: Best fit when you want step-wise, auditable synthesis that shows how claims map to sources, especially across big document sets. blog.google

Claude (Anthropic)

- How it synthesizes: Claude’s web search feature pulls fresh material and automatically cites sources in its answers, which encourages verifiable summaries and comparative matrices. Anthropic

- Working with many papers: Claude Sonnet 4 now supports a context window of up to 1M tokens in the API (beta), improving Claude’s ability to summarize large corpora without heavy chunking. The Verge

Bottom line: Great when you want citation-forward, high-recall summaries and conservative phrasing that avoids over-claiming while still pulling threads across many documents. Anthropic

How to get higher-quality literature reviews (works in all three)

- Force structure: “Create a 4-column evidence table (Study, Year, Method, Key Finding) + a 5-bullet ‘What the field agrees on / disagrees on’ summary.”

- Demand auditable claims: “For every claim, add a bracketed [#] linked to the exact URL/DOI and quote the verifying line.”

- Scope precisely: “Prioritize RCTs and meta-analyses since 2023; exclude opinion pieces.”

- Iterate: “List gaps/limitations and 3 follow-up searches you’d run next.”
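Once a tool returns an evidence table, you can enforce the scoping rule yourself instead of trusting the model to apply it. This sketch assumes the table has already been parsed into the four columns from the structure prompt above; the rows are placeholders, and the accepted methods and cutoff year are parameters you would set per review.

```python
import csv
import io
from dataclasses import astuple, dataclass


@dataclass
class Evidence:
    study: str
    year: int
    method: str
    key_finding: str


HEADER = ["Study", "Year", "Method", "Key Finding"]


def scoped_csv(rows, since=2023, methods=("RCT", "Meta-analysis")):
    """Keep rows inside the review scope, newest first, and serialize them as CSV text."""
    kept = [r for r in rows if r.year >= since and r.method in methods]
    kept.sort(key=lambda r: (-r.year, r.study))
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(HEADER)
    writer.writerows(astuple(r) for r in kept)
    return buf.getvalue()


# Placeholder rows standing in for a model-produced evidence table.
rows = [
    Evidence("Study A", 2024, "RCT", "placeholder finding, effect reported"),
    Evidence("Study B", 2021, "RCT", "placeholder finding"),
    Evidence("Study C", 2023, "Opinion", "placeholder finding"),
    Evidence("Study D", 2023, "Meta-analysis", "placeholder finding"),
]
print(scoped_csv(rows))
```

Filtering locally also gives you a record of which rows were dropped and why, which is easier to audit than a model’s silent omissions.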

Verdict for this category

- Deep, step-wise synthesis with a clear sources panel: Gemini (Deep Research). blog.google

- Citation-rich narrative summaries with conservative tone: Claude. Anthropic

- Flexible everyday syntheses that blend web + your PDFs inside one workspace: ChatGPT (especially with Projects and large-context models). OpenAI Help Center; OpenAI

Long-Context Handling & File Uploads

For “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?”, long-context capacity and file handling determine how big a reading list you can ingest at once—and how painlessly you can synthesize it. Below is what matters now, with verified limits and gotchas.

ChatGPT (OpenAI)

- Context window (API): OpenAI’s GPT-4.1 supports up to ~1M tokens in the API, useful for very large corpora and multi-PDF reviews. OpenAI; OpenAI Platform

- Projects & persistence: Projects let you keep chats, instructions, and files together so the model can reference them across sessions. (It’s designed for bigger, ongoing work.) OpenAI Help Center

- File limits (ChatGPT app): 512 MB per file; document uploads are additionally capped at ~2M tokens per file (spreadsheets have separate limits). Plus: up to 20 files per Project; Pro/Team/Edu/Enterprise: up to 40; 10 files per upload batch. OpenAI Help Center+2; OpenAI Help Center+2

Takeaway: If you’re comfortable orchestrating your review in Projects and occasionally pushing heavy synthesis to the API, ChatGPT handles very large contexts and sizeable files with straightforward, well-documented limits. OpenAI; OpenAI Help Center

Gemini (Google)

- Context window: In the consumer app (Google AI Pro), Google advertises ~1M tokens of context. In developer stacks (Vertex AI / Gemini API), Gemini 1.5 Pro offered up to 2M tokens, while Gemini 2.5 Pro’s documented input limit is ~1M tokens. Gemini+1; Google Developers Blog; Google Cloud

- Deep Research + files: Deep Research can search the web and synthesize findings while also letting you upload your own files into the investigation. Gemini

- File limits (Gemini Apps): Up to 10 files per prompt; most files up to 100 MB, videos up to 2 GB. You can also attach a GitHub repo (up to 5,000 files, 100 MB total) to a chat. Google Help+1

Takeaway: If you want huge context without touching code, Gemini in Google AI Pro is strong; if you build tooling or need maximal headroom, the Gemini API and Vertex AI offer the largest windows (up to 2M tokens on models that support it). Google Cloud

Claude (Anthropic)

- Context window: Claude’s standard context window is 200K tokens; Claude Sonnet 4 now offers up to 1M tokens via the API (currently behind a beta flag). Check your interface and tier. Anthropic; anthropic.com

- File limits (Claude.ai chat): Typically 30 MB per file and up to 20 files per chat in the web app; API storage allows larger files (up to 500 MB) and org-level quotas. Anthropic Help Center; Anthropic

Takeaway: For long-document reviews, Claude via API now competes on raw context; the chat UI favors many medium-sized uploads with conservative, citation-friendly synthesis. Anthropic

Multimodal Research (PDFs, Tables, Images)

For research, multimodal skills matter: can the tool read PDFs, extract tables, and interpret figures and images reliably? Here’s what each one offers today.

ChatGPT (OpenAI)

- PDFs & tables: Reads PDFs and can interpret embedded visuals (charts, diagrams) in PDFs—especially on Enterprise, which explicitly supports visual understanding inside PDFs. OpenAI Help Center

- Images & screenshots: Vision models can analyze images, reason over charts and tables in pictures, and combine this with web search or Python-based Data Analysis for deeper work (CSV/Excel). OpenAI+1

- File handling (quick recap): Upload common docs/spreadsheets; clear limits and project-based organization help manage large literature sets. OpenAI Help Center+2; OpenAI Help Center+2

Use it when: You need a single workspace to mix PDFs + spreadsheets + images, and want to chart or clean data alongside document reading.

Gemini (Google)

- PDFs & images: Gemini Apps let you upload multiple files (Docs/PDFs/Word, images, videos) in one prompt; Advanced tiers provide longer context for big PDFs. Google Help; Gemini

- Deep Research + files: You can add your own files into Deep Research so the system cross-references uploads with the open web and returns a sources-linked report. Gemini

- Multimodal models: Current Gemini models accept images, audio, video, and text as inputs, designed for complex multimodal reasoning (dev/API paths). Google AI for Developers

Use it when: You want auditable, step-wise synthesis that blends your PDFs/images with web findings in one linked write-up.

Claude (Anthropic)

- PDFs & tables: Claude can process PDFs—including charts and tables—and return structured summaries or extracted tables. Anthropic

- Images: The Vision capability handles screenshots, diagrams, and photos with guidance on best practices and limits. Anthropic

- Developer workflow: A Files API helps stage recurring documents/datasets for repeated analyses (useful for iterative reviews). File-size and per-chat file-count limits apply in the web app. Anthropic; Anthropic Help Center

Use it when: You prefer citation-forward, conservative summaries from many uploads, and you may automate pipelines via API.

Practical prompting tips (works in all three)

- “Extract all tables in this PDF. Return a CSV for each and note any missing headers/units.”

- “For each figure, describe the axes, sample size, and main effect. If unclear, say so.”

- “Create a 4-column evidence table (Study, Year, Method, Key Finding) and link each claim to the exact page/figure.”

- “If any pages or images couldn’t be read/OCR’d, list them explicitly.”
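Model-extracted tables often come back with blank headers, dropped cells, or units stripped from column names. This sketch audits a returned CSV before you rely on it. The unit check is a heuristic assumption (an all-numeric column whose header lacks a parenthesized unit such as “Dose (mg)”), so it will also flag legitimately unitless columns like Year.

```python
import csv
import io


def _is_number(cell: str) -> bool:
    try:
        float(cell)
        return True
    except ValueError:
        return False


def audit_table(csv_text: str) -> list:
    """List problems in a model-extracted CSV: blank headers, ragged rows, numeric columns without units."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return ["table is empty"]
    header, body = rows[0], rows[1:]
    problems = []
    for i, name in enumerate(header):
        if not name.strip():
            problems.append(f"column {i + 1} has no header")
    for line_no, row in enumerate(body, start=2):  # header is line 1
        if len(row) != len(header):
            problems.append(f"row {line_no} has {len(row)} cells, expected {len(header)}")
    # Heuristic: an all-numeric column whose header lacks "(unit)" probably lost its unit.
    for i, name in enumerate(header):
        values = [row[i] for row in body if i < len(row) and row[i].strip()]
        if values and all(_is_number(v) for v in values) and "(" not in name:
            problems.append(f"column '{name or i + 1}' is numeric but its header has no unit")
    return problems


print(audit_table("Drug,Dose,Outcome (%)\nA,10,12.5\nB,20\n"))
```

Feeding the problem list back to the model (“fix these issues and re-export”) tends to be faster than re-running the whole extraction.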

Verdict for this category

- Best for blended multimodal + analysis workflows: ChatGPT (vision + Data Analysis + Projects in one place). OpenAI; OpenAI Help Center

- Best for auditability across uploads + web: Gemini (Deep Research) with file attachments and a consolidated sources view. Gemini

- Best for careful PDF/table summarization with citations & API pipelines: Claude (PDF/table understanding + Files API). Anthropic+1

Data Analysis & Coding for Research

A big differentiator is whether the tool can run code, analyze real datasets (CSV/Excel/JSON/PDF), and produce charts/tables, all with a workflow you can repeat and audit.

ChatGPT (OpenAI)

- Built-in Python execution (“Data Analysis”). Inside ChatGPT you can upload files, and the model will write & run Python to clean data, run stats, and generate charts. You can see the code (“View analysis”), interact with tables/charts, and download figures; no separate notebook is required. OpenAI; OpenAI Help Center

- How it works (under the hood). ChatGPT uses pandas for data wrangling and Matplotlib for plots, executing in a secure sandbox with no outbound network access. The sandbox limits what the code can reach, and because the code is shown, you can rerun it elsewhere to reproduce the analysis. OpenAI Help Center

Use ChatGPT when: you want a no-setup environment to crunch a CSV/Excel quickly, show the exact code used, and export clean visuals—all inside one chat. OpenAI

Gemini (Google)

- Notebook-native assistance. Gemini in Colab Enterprise helps you write, explain, and fix Python directly in notebooks—great if you already live in Colab/Jupyter. Google Cloud

- Warehouse-side analytics. Gemini in BigQuery can generate SQL and Python, explain queries, and surface insights in BigQuery Studio—handy for medium/large datasets you don’t want to pull out of the warehouse. Google Cloud+1

Use Gemini when: your workflow is notebook-first or data-warehouse-first—you’ll keep code and data where they already live while getting AI help for SQL/Python authoring. Google Cloud

Claude (Anthropic)

- Runs code in-app (JS) + via API (Python). In Claude.ai, the Analysis tool can run JavaScript in a built-in sandbox for data tasks (feature preview). For programmatic pipelines, the Code Execution tool (API, beta) lets Claude run Python in a secure sandbox to analyze files and create visualizations. You can also extend Claude with tool use for custom workflows. anthropic.com; Anthropic+1

Use Claude when: you want conservative, step-by-step analysis and plan to embed code execution in a repeatable API/agent pipeline. Anthropic

Practical prompts that work (all three)

- “Load this CSV, fix missing values, and produce a summary table + histogram. Share the code.”

- “Create a reproducible pipeline: data load → clean → model (logistic regression) → metrics (AUC, confusion matrix) → chart.”

- “Export a tidy CSV of the final table and a PNG of the chart.”

- “If any step fails, show the error and the revised code you used to fix it.”
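The first prompt above asks for a load, clean, summarize flow with visible code; this is roughly the shape of code you would want back. It uses only the Python standard library (an assumption so it runs anywhere; ChatGPT’s Data Analysis typically uses pandas instead). Median imputation is one illustrative reading of “fix missing values,” not a recommendation for every dataset, and the sample data is hypothetical.

```python
import csv
import io
import statistics


def load(csv_text: str) -> list:
    """Parse CSV text into a list of dicts keyed by header."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def impute_median(rows: list, column: str) -> int:
    """Fill blank cells in `column` with the median of the non-blank values; return how many were filled."""
    present = [float(r[column]) for r in rows if r[column].strip()]
    fill = statistics.median(present)
    filled = 0
    for r in rows:
        if not r[column].strip():
            r[column] = str(fill)
            filled += 1
    return filled


def summarize(rows: list, column: str) -> dict:
    """Summary table for one numeric column."""
    values = [float(r[column]) for r in rows]
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values) if len(values) > 1 else 0.0,
    }


def histogram(values: list, bins: int) -> list:
    """Equal-width bin counts; the maximum value lands in the last bin."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # avoid division by zero when all values are equal
    counts = [0] * bins
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    return counts


SAMPLE = "id,score\n1,2\n2,\n3,4\n4,6\n"  # hypothetical data with one missing value
rows = load(SAMPLE)
print("filled:", impute_median(rows, "score"))
print(summarize(rows, "score"))
print(histogram([float(r["score"]) for r in rows], bins=2))
```

Keeping each step as a named function makes it easy to ask for a single change (“switch imputation to the mean”) and rerun the rest unchanged.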

Verdict for this category

- Fast, no-setup analysis with charts & visible Python: ChatGPT (Data Analysis is the most turnkey for non-coders). OpenAI; OpenAI Help Center

- Best inside notebooks or BigQuery: Gemini (strong Colab/BigQuery integration for Python/SQL at source). Google Cloud+1

- Best for agentic/API pipelines with code execution: Claude (JS in app; Python sandbox via API beta + tool use). anthropic.com; Anthropic

Structured Outputs & Exports

This section focuses on how cleanly each tool can return structured data (JSON/CSV/tables) and export your work to docs, sheets, or files you can reuse.

ChatGPT (OpenAI)

- Schema-true JSON (API). OpenAI’s Structured Outputs lets you enforce a JSON Schema so responses must match the shape you define (great for evidence tables, study metadata, etc.). OpenAI Platform+1

- Downloadables in the app. With Data Analysis, ChatGPT can generate and let you download CSVs/plots right from the chat (you can also view the exact Python it ran for reproducibility). OpenAI Help Center; mitsloanedtech.mit.edu

- Shareable links. You can create shared links to a conversation for quick collaboration (handy when passing a structured summary to teammates). OpenAI Help Center

Use it when: you need guaranteed JSON from the API and quick CSV/figure exports from an analysis run, without leaving the chat. OpenAI Platform; OpenAI Help Center

Gemini (Google)

- Schema-true JSON (API). Gemini supports structured output via responseSchema (AI Studio / Google AI for Developers) and Vertex AI (“response schema”), useful when you need strict, machine-readable results. Google AI for Developers; Google Cloud+1

- One-click Workspace exports. From Gemini Apps you can Export to Docs/Gmail, and for tables you can Export to Sheets. Deep Research reports also include Export to Docs from the Canvas panel. Google Help+2; Google Help+2

Use it when: your workflow revolves around Google Docs/Sheets and you want auditable, exportable research reports with minimal friction. Google Help+1

Claude (Anthropic)

- Schema control (API). Claude can be guided to emit JSON that follows a schema by using tools/tool use, a common pattern for reliable structured output. Anthropic’s docs also cover “JSON mode” style prompts to increase output consistency. Anthropic+2; Anthropic+2

- Artifacts & sharing. Artifacts present substantial, standalone outputs (documents, code, data apps) in a dedicated pane you can share or build on; org admins can also export org data from settings. Anthropic Help Center+1; anthropic.com

Use it when: you want conservative, citation-forward summaries plus programmatic JSON for pipelines—especially if you’ll package results as Artifacts. Anthropic
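Here is what the tool-use pattern can look like as a Messages API request body: define one tool whose input_schema is your JSON Schema, force Claude to call it with tool_choice, then read the tool_use block’s input as your structured record. The model ID, tool name, and schema fields are assumptions for illustration. The body would be POSTed to the Messages API with your API key and an anthropic-version header; this sketch builds and parses dictionaries only and makes no network call.

```python
import json

# Illustrative schema; field names are assumptions, not a required format.
EVIDENCE_SCHEMA = {
    "type": "object",
    "properties": {
        "study": {"type": "string"},
        "year": {"type": "integer"},
        "method": {"type": "string"},
        "key_finding": {"type": "string"},
        "doi": {"type": "string"},
    },
    "required": ["study", "year", "method", "key_finding"],
}


def build_request(paper_text: str, model: str = "claude-sonnet-4-20250514") -> dict:
    """Messages API body that makes Claude answer by 'calling' a schema-typed tool."""
    return {
        "model": model,  # assumption: use whichever model your account has access to
        "max_tokens": 1024,
        "tools": [{
            "name": "record_evidence",  # hypothetical tool name
            "description": "Record the metadata of one study described in the text.",
            "input_schema": EVIDENCE_SCHEMA,
        }],
        "tool_choice": {"type": "tool", "name": "record_evidence"},  # force this tool
        "messages": [{"role": "user", "content": "Extract the study metadata:\n\n" + paper_text}],
    }


def extract_tool_input(response: dict, tool_name: str = "record_evidence") -> dict:
    """Pull the structured arguments out of the tool_use block in a Messages API response."""
    for block in response.get("content", []):
        if block.get("type") == "tool_use" and block.get("name") == tool_name:
            return block["input"]
    raise ValueError(f"no tool_use block named {tool_name!r}")


print(json.dumps(build_request("(paper text here)"), indent=2))
```

Because the schema guides rather than strictly enforces the shape, it is still worth validating the extracted input before it enters your pipeline.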

Practical formats to request (works in all three)

- JSON with schema: “Return results in JSON; keys: study, year, method, n, effect_size (float), doi (string).” (For APIs, attach a JSON Schema.) OpenAI Platform; Google AI for Developers

- CSV export: “Output the extracted table as a downloadable CSV and include a quick data dictionary.” OpenAI Help Center

- Docs/Sheets handoff (Gemini): “Format as a table, then Export to Docs / Export to Sheets.” Google Help+1
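For the JSON prompt above, a schema like the following is what you would attach on the API side. The field names mirror that prompt; the OpenAI fragment follows the Chat Completions Structured Outputs shape, while Gemini’s responseSchema uses its own OpenAPI-style subset, so the schema may need adapting there. The small validator covers only required keys, primitive types, and extra keys (a subset of JSON Schema, not a replacement for a full validator), which makes it a useful last check whichever vendor produced the JSON. The sample reply is a placeholder.

```python
import json

# Schema for one evidence record, mirroring the keys in the JSON prompt.
EVIDENCE_SCHEMA = {
    "type": "object",
    "properties": {
        "study": {"type": "string"},
        "year": {"type": "integer"},
        "method": {"type": "string"},
        "n": {"type": "integer"},
        "effect_size": {"type": "number"},
        "doi": {"type": "string"},
    },
    "required": ["study", "year", "method", "n", "effect_size", "doi"],
    "additionalProperties": False,
}

# Attaching it via OpenAI Chat Completions Structured Outputs (request fragment only).
OPENAI_RESPONSE_FORMAT = {
    "type": "json_schema",
    "json_schema": {"name": "evidence_record", "strict": True, "schema": EVIDENCE_SCHEMA},
}

_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
}


def validate(record: dict, schema: dict = EVIDENCE_SCHEMA) -> list:
    """Check required keys, primitive types, and extra keys (a small subset of JSON Schema)."""
    errors = [f"missing key: {k}" for k in schema["required"] if k not in record]
    props = schema["properties"]
    for key, value in record.items():
        if key not in props:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected key: {key}")
            continue
        expected = props[key]["type"]
        if not _CHECKS[expected](value):
            errors.append(f"{key}: expected {expected}")
    return errors


reply = '{"study": "Study A", "year": 2024, "method": "RCT", "n": 120, "effect_size": 0.31, "doi": "10.1000/example"}'
print(validate(json.loads(reply)))  # → []
```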

Quick verdict (structured outputs & exports)

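- Most robust JSON guarantees (API): ChatGPT and Gemini, which enforce a JSON Schema/response schema; Claude gets close via tool use with an input schema, though that pattern guides rather than strictly enforces the shape, so validate its output. OpenAI Platform; Google AI for Developers; Anthropic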

- Smoothest document handoff: Gemini, thanks to built-in Export to Docs/Sheets and Deep Research Export to Docs. Google Help+1

- Researcher-friendly CSV/plot exports from a chat: ChatGPT Data Analysis (download files + visible code). OpenAI Help Center

Integrations & Ecosystem

ChatGPT (OpenAI)

- Connectors (built-in): Official connectors let ChatGPT pull from third-party apps (e.g., Google Drive, GitHub, SharePoint) inside a chat. In August 2025, OpenAI added Gmail, Google Calendar, and Google Contacts connectors for Plus/Pro users. OpenAI Help Center+1

- Cloud files in chat: You can add files directly from OneDrive/SharePoint via URL, making it easy to analyze documents without manual downloads. OpenAI Help Center

- Actions & function calling (for APIs): Build GPTs that call external REST APIs via GPT Actions, or wire up your own endpoints with function calling—useful for custom research pipelines. OpenAI Platform+1

- Agentic workflow: The new ChatGPT agent can navigate sites, work with files, and connect to third-party data sources to complete multi-step tasks. OpenAI Help Center

- Automation ecosystem: Broad first-party and Zapier support to trigger cross-app workflows. Zapier

Best if: You want a wide, fast-moving ecosystem (connectors + Actions + Zapier) that can reach email, calendars, cloud drives, and custom APIs, all from one chat. OpenAI Help Center; OpenAI Platform

Gemini (Google)

- Workspace-native: Gemini runs inside Gmail, Docs, Sheets, and Drive, with one-click Export to Docs/Sheets and side-panel assistance, great for turning research into shareable docs. Google Help; Google Workspace

- Deep Research + files: You can include your own files in Deep Research so the write-up cross-references uploads with the open web and returns linked sources. Google Help

- Data stack integrations: Gemini in BigQuery (SQL/Python assist) and Gemini in Colab Enterprise support notebook/warehouse-first analysis without moving data. Google Cloud+2; Google Cloud+2

- Extensions (apps): Gemini Apps can pull from Google services like Maps, Flights, YouTube and more, when enabled. Google Help

Best if: Your team already lives in Google Workspace or BigQuery/Colab and you want the smoothest path from research → Docs/Sheets with minimal glue code. Google Help

Claude (Anthropic)

- Workspace connections: Claude now offers Google Workspace integrations to search Gmail, review Docs, and see Calendar context—handy for research briefs and literature management. anthropic.com

- Tool use / API ecosystem: Robust tool use lets Claude call your services (server/client tools), including web search and custom APIs, so you can stitch research workflows programmatically. Anthropic

- Artifacts (shareable outputs): Artifacts package substantial outputs (docs, code, mini-apps) you can share and iterate on—useful for publishing research summaries or data apps internally. anthropic.com+1

- Automation: Zapier supports Claude, so you can trigger downstream tasks (e.g., file-to-brief pipelines). Zapier; anthropic.com

Best if: You want conservative, citation-forward research plus programmable tool use and shareable Artifacts for repeatable internal workflows. Anthropic; anthropic.com

Quick verdict (integrations & ecosystem)

- ChatGPT: Broadest connector mix + Actions + agent; strong for heterogeneous stacks and custom API workflows. OpenAI Help Center; OpenAI Platform

- Gemini: Easiest Workspace-native path from insights to Docs/Sheets; strong data-side hooks (BigQuery, Colab). Google Help; Google Cloud

- Claude: Clean Workspace tie-ins, robust tool use, and Artifacts for packaging research deliverables. anthropic.com; Anthropic

Privacy, Security & Compliance

When comparing “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?” on privacy, readers care about four things: (1) training use of their data, (2) certifications & legal addenda (DPA/BAA), (3) encryption & retention controls, and (4) data residency/sovereignty. Here’s the current, vendor-official picture.

ChatGPT (OpenAI)

- Training defaults (business tiers): OpenAI says ChatGPT Team, Enterprise, and Edu data isn’t used to train models by default; you own your inputs/outputs. OpenAI+1

- Consumer controls: Individuals can opt out of training via Data Controls. OpenAI Help Center+1

- Certifications & security: SOC 2 Type 2; AES-256 at rest and TLS 1.2+ in transit. OpenAI+1

- Retention & ZDR: API data is typically retained up to 30 days for abuse monitoring; Zero Data Retention (ZDR) is available for eligible endpoints/customers. OpenAI

- Data residency: Options now include US, Europe, Japan, Canada, South Korea, Singapore, and India (relevant for regulated/geofenced workloads). OpenAI Help Center; OpenAI

- Compliance paperwork: DPA available; BAA available for qualifying healthcare/API use cases. OpenAI+1

- Third-party connectors caution: When using GPT Actions/connected APIs, your data may go to that third party—use only services you trust. OpenAI Help Center

Good fit when: you need broad compliance features (SOC 2, DPA/BAA), multi-region residency including India/EU, and fine-grained retention controls/ZDR for API workloads. OpenAI Help Center; OpenAI

Gemini (Google)

- Workspace data use: Google states your organization’s Workspace data is not used to train Gemini models or for ads, with DLP, IRM, and client-side encryption (CSE) controls. Google Workspace

- Certifications & HIPAA: Google highlights SOC 1/2/3, ISO 27001/27701 and support for HIPAA scenarios in Gemini for Workspace. Google Workspace+1

- Sovereignty tooling: Workspace offers controls for digital sovereignty (admin policies, regional controls) helpful to compliance teams. Google Workspace+1

- Consumer app privacy notes: Recent Personal Context and Temporary Chats features let you choose what Gemini remembers and what isn’t stored/used, which is useful for personal research privacy. The Verge; Android Central

Good fit when: your org already runs on Google Workspace and you want native Docs/Sheets/Gmail integrations under enterprise terms that don’t train on your domain’s data. Google Workspace

Claude (Anthropic)

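- Training defaults: Anthropic says it doesn’t use inputs/outputs from its commercial products (Team, Enterprise, API) to train models unless you explicitly opt in or report content. For consumer plans (Free/Pro/Max), Anthropic updated its terms in late August 2025 so that users choose whether their chats may be used for training; check the setting in your account. Anthropic Privacy Center+1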

- Role & agreements: For commercial customers, Anthropic acts as a data processor; BAA is available for HIPAA-eligible API use (with feature limits like web search excluded under the BAA). Anthropic Help Center; Anthropic Privacy Center+1

- Certifications: SOC 2 Type II, ISO 27001, ISO 42001 (AI management), and others published via Trust Center. Anthropic Privacy Center; Trust Center

Good fit when: you want privacy-forward defaults, HIPAA-eligible API paths under a BAA, and a vendor that positions itself as a strict processor for enterprise use. Anthropic Privacy Center; Anthropic Help Center

Practical checklist (what to enable regardless of vendor)

- Turn off model training for work accounts (if not already off by default). OpenAI; Google Workspace; Anthropic Privacy Center

- Lock retention (short windows or ZDR on API where eligible). OpenAI

- Use regional storage/processing to meet residency rules (EU/India/Asia options exist). OpenAI Help Center

- Route sensitive workloads through first-party, compliant paths (e.g., Workspace Gemini, ChatGPT Enterprise/API with ZDR, Claude API with BAA). Google Workspace; OpenAI; Anthropic Privacy Center

- Review third-party tool permissions (connectors, Actions, extensions). OpenAI Help Center

Verdict for this category

- Most “Workspace-native” compliance out-of-the-box: Gemini (no training on domain data, CSE/DLP/IRM, broad certifications). Google Workspace+1

- Broader residency/ZDR knobs across stacks: ChatGPT (Team/Enterprise/Edu no-training, SOC 2, multi-region data residency, API ZDR). OpenAI+1; OpenAI Help Center

- Privacy-forward defaults with HIPAA-eligible API: Claude (no training on commercial data by default; BAA for qualifying use; strong certs). Anthropic Privacy Center+1

That’s the compliance angle behind “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?”—choose based on your stack, regulatory scope, and residency needs.

Speed, Reliability & Rate Limits

For the query “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?”, engineers and writers mainly care about three things: (1) how fast the first token arrives (perceived speed), (2) platform reliability/uptime, (3) the practical ceilings set by rate limits.

ChatGPT (OpenAI)

Speed

- The Responses API supports streaming, so you can render text as it’s generated (fast first token; lower perceived latency). OpenAI Platform+1

Reliability

- OpenAI publishes a public status page. Over May–Aug 2025 the dashboard shows ~99.66% API uptime and ~99.43% ChatGPT uptime, with incident history you can audit. OpenAI Status+1

Rate limits

- API limits are tiered by org and model and measured in RPM / TPM / RPD (requests & tokens). See the official Rate limits guide; Azure OpenAI documents the same RPM/TPM model on Azure. OpenAI Platform; Microsoft Learn

- In the ChatGPT app, message caps exist per model; for example, current help content lists a GPT-5 cap of ~160 messages per 3 hours for Plus (subject to change). OpenAI Help Center

What it means in practice: use streaming for responsiveness, and design your app for graceful backoff when RPM/TPM thresholds are hit.

Gemini (Google)

Speed

- Google offers a Live API for low-latency, real-time interactions (voice/video/text), designed to minimize round-trip delays for interactive experiences. Google AI for Developers

- The Gemini API streams responses through its streamGenerateContent method (server-sent events when called with alt=sse), and Google’s SDKs wrap this for you. Google AI for Developers

Reliability

- Google exposes service health via the Google Cloud Status Dashboard (Vertex AI is tracked there; Google Workspace has its own Workspace Status Dashboard). A dedicated Gemini-only uptime page is not separately listed; most teams monitor Google’s status boards and issue trackers. status.cloud.google.com; Google AI Developers Forum

Rate limits

- Gemini API documents limits by RPM / TPM / RPD and evaluates usage per project. Vertex AI additionally documents quota ceilings (e.g., token-per-request caps and embedding limits). Google AI for Developers; Google Cloud

What it means in practice: If you want snappy, live interactions (e.g., research assistants that “talk back”), Gemini’s Live API is purpose-built; for large-scale jobs use Vertex quotas and watch per-project rate limits. Google AI for Developers
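Staying under a per-project RPM limit on the client side avoids most 429s in the first place. A minimal sliding-window sketch, assuming a single process and a requests-per-minute ceiling you read from your own quota page (the 60 RPM below is a placeholder). Token-per-minute limits could be tracked the same way by storing token counts alongside the timestamps.

```python
import time
from collections import deque


class RequestWindow:
    """Client-side sliding window that keeps you under an assumed requests-per-minute ceiling."""

    def __init__(self, rpm: int, clock=time.monotonic):
        self.rpm = rpm
        self.clock = clock
        self.sent = deque()  # timestamps of requests sent in the last 60 s

    def wait_time(self) -> float:
        """Seconds to wait before the next request is allowed (0.0 means send now)."""
        now = self.clock()
        while self.sent and now - self.sent[0] >= 60.0:
            self.sent.popleft()  # drop requests that fell out of the window
        if len(self.sent) < self.rpm:
            return 0.0
        return 60.0 - (now - self.sent[0])

    def record(self) -> None:
        """Call right after each request is sent."""
        self.sent.append(self.clock())


window = RequestWindow(rpm=60)  # hypothetical per-project limit
delay = window.wait_time()
if delay:
    time.sleep(delay)
window.record()  # then send the request
```

The injectable clock keeps the class testable; in a multi-process deployment you would need a shared store instead of an in-memory deque.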

Claude (Anthropic)

Speed

- The Messages API supports SSE streaming; set "stream": true in the request to start receiving tokens as soon as they are generated. Anthropic
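With streaming enabled, the response arrives as server-sent events: "event:" and "data:" lines, with a blank line separating each event. Below is a stdlib-only Python sketch that parses such a stream and stitches the text back together. It assumes Anthropic-style content_block_delta events whose JSON carries a delta of type text_delta. The official SDKs handle this for you, so the sketch is mainly useful for understanding the wire format or for raw HTTP clients.

```python
import json
from typing import Iterable, Iterator, List, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event, data) pairs from server-sent-event lines.

    A blank line ends an event; lines starting with ":" are comments (keep-alives).
    An event left incomplete at end of stream is dropped, as the SSE spec requires.
    """
    event = "message"  # SSE default event type
    data: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):
            continue
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # one optional space after the colon
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


def collect_text(lines: Iterable[str]) -> str:
    """Join text deltas, assuming Anthropic-style content_block_delta events."""
    parts: List[str] = []
    for event, data in parse_sse(lines):
        if event == "content_block_delta":
            delta = json.loads(data).get("delta", {})
            if delta.get("type") == "text_delta":
                parts.append(delta.get("text", ""))
    return "".join(parts)


if __name__ == "__main__":
    # Illustrative stream; real responses include more event types.
    demo = [
        "event: content_block_delta",
        'data: {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": "Hi"}}',
        "",
    ]
    print(collect_text(demo))
```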

Reliability

- Anthropic publishes uptime for claude.ai and api.anthropic.com. Recent 90-day windows show ~99–100% availability, and monthly uptime is listed on the public status page. status.anthropic.com

Rate limits

- Limits are published per model and per usage tier. Example (Messages API, per organization): Claude Opus 4.x / Sonnet 4 range from hundreds of thousands to millions of tokens per minute as you move up tiers (e.g., ITPM, input tokens per minute: 450k → 2,000,000; OTPM, output tokens per minute: 90k → 400k; RPM: 1,000 → 4,000). Exceeding a limit returns HTTP 429 with a retry-after header. Anthropic
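The retry-after value tells you how long to wait. A defensive Python helper is sketched below. It accepts both forms the HTTP specification allows for Retry-After (a number of seconds or an HTTP-date). It returns None when the header is missing or unparseable, so the caller can fall back to its own backoff. The helper name is hypothetical.

```python
import email.utils
from datetime import datetime, timezone
from typing import Optional


def retry_after_seconds(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Interpret a Retry-After header value as a wait in seconds (None if unusable)."""
    if value is None:
        return None
    value = value.strip()
    if value.isascii() and value.isdigit():
        return float(value)  # delay-seconds form, e.g. "30"
    try:
        when = email.utils.parsedate_to_datetime(value)  # HTTP-date form
    except (TypeError, ValueError):
        return None  # older Pythons raise TypeError, newer raise ValueError
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())  # never return a negative wait
```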

What it means in practice: Claude is easy to plan capacity for because Anthropic publishes concrete token ceilings and 429 retry guidance. Anthropic
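Published ceilings turn capacity planning into a quick calculation. The sustainable request rate is the smallest of three numbers: RPM, ITPM divided by average input tokens per request, and OTPM divided by average output tokens per request. The sketch below applies this to the tier figures quoted above. The workload (3,000 input and 800 output tokens per request) is a hypothetical example.

```python
from typing import Dict, Tuple


def binding_limit(rpm: int, itpm: int, otpm: int,
                  avg_input_tokens: int, avg_output_tokens: int) -> Tuple[int, str]:
    """Return (sustainable requests per minute, name of the limit that binds)."""
    candidates: Dict[str, int] = {
        "RPM": rpm,
        "ITPM": itpm // avg_input_tokens,   # requests/min allowed by input tokens
        "OTPM": otpm // avg_output_tokens,  # requests/min allowed by output tokens
    }
    name = min(candidates, key=lambda k: candidates[k])
    return candidates[name], name


if __name__ == "__main__":
    # Hypothetical workload: 3,000 input and 800 output tokens per request.
    print("lower tier:  ", binding_limit(1_000, 450_000, 90_000, 3_000, 800))
    print("highest tier:", binding_limit(4_000, 2_000_000, 400_000, 3_000, 800))
```

For that hypothetical workload, output tokens are the binding limit at both ends of the range. That works out to about 112 requests per minute at the lower tier and 500 at the highest, both well below the RPM caps.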

Practical tips to actually go fast (works for all three)

- Stream everything (the UI shows text as it arrives). OpenAI Platform; Anthropic

- Chunk & cache: split long tasks; cache repeated summaries to save tokens and time.

- Use backoff and retries on 429 or transient errors, and respect the retry-after header. Anthropic

- Prefer shorter outputs with structured formats (tables/JSON) to cut generation time.

- Monitor vendor status and degrade gracefully (e.g., switch models or fall back to cached results). OpenAI Status; status.cloud.google.com; status.anthropic.com
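The chunk-and-cache tip fits in a few lines of Python. chunk_text splits a long document into overlapping windows, using characters as a rough proxy for tokens. SummaryCache memoizes results keyed by a hash of model, prompt, and chunk, so repeated requests skip the API call. Both names are hypothetical. The summarize callback stands in for whatever vendor call you already make.

```python
import hashlib
from typing import Callable, Dict, List


def chunk_text(text: str, max_chars: int = 4000, overlap: int = 200) -> List[str]:
    """Split text into overlapping character windows (a rough stand-in for tokens)."""
    if overlap >= max_chars:
        raise ValueError("overlap must be smaller than max_chars")
    chunks: List[str] = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        chunks.append(text[start:end])
        if end == len(text):
            break
        start = end - overlap  # step back so context spans chunk boundaries
    return chunks


class SummaryCache:
    """In-memory cache keyed by a SHA-256 hash of (model, prompt, chunk)."""

    def __init__(self, summarize: Callable[[str, str, str], str]):
        self.summarize = summarize  # your own function that calls a vendor API
        self.store: Dict[str, str] = {}

    def get(self, model: str, prompt: str, chunk: str) -> str:
        key = hashlib.sha256("\x00".join((model, prompt, chunk)).encode("utf-8")).hexdigest()
        if key not in self.store:
            self.store[key] = self.summarize(model, prompt, chunk)
        return self.store[key]
```

This cache lives in your own process, separate from any server-side prompt caching a vendor offers. Swapping the dict for a file or database makes it survive restarts.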

Verdict for this category

- Fastest interactive feel: Gemini’s Live API is tailored for low-latency, real-time experiences. Google AI for Developers

- Best for published, high token-throughput tiers: Claude (clear, generous token/minute ceilings at higher tiers). Anthropic

- Strong overall reliability + mature streaming & tooling: ChatGPT/OpenAI (public uptime metrics, streaming, and widely adopted SDKs). OpenAI Status; OpenAI Platform

That’s the speed/limits lens on “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?”—pick based on whether you need snappy live UX, bulk token throughput, or broad stability + ecosystem.

Cost & Value for Money

If you’re Googling “ChatGPT vs. Gemini vs. Claude: Which is the best research tool?”, you’re usually weighing monthly price against what you actually get (limits, features, and extras like storage or integrations). Here’s a crisp, current view.

Snapshot — individual plans

- ChatGPT Plus — $20/month. Straightforward upgrade for higher limits and premium features. OpenAI

- Google AI Pro (Gemini) — $19.99/month. Includes Gemini 2.5 Pro access, Deep Research, and 2 TB Google One storage; also unlocks Gemini in Gmail/Docs (in supported languages). Gemini

- Claude Pro — $20/month or ~$17/month if billed annually ($200 up-front). Good value if you prefer annual billing. anthropic.com

Value tip (solo): Price is basically a tie at ~$20/mo. Gemini’s bundle (2 TB storage + Workspace tie-ins) is a strong sweetener; Claude is about $3/mo cheaper if you pay annually. Gemini; anthropic.com
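Here is the yearly arithmetic behind that tip, using only the list prices quoted above (taxes and regional pricing ignored):

```python
MONTHS = 12

# Yearly totals from the list prices quoted above (USD, before tax).
plans = {
    "ChatGPT Plus (monthly)": 20.00 * MONTHS,
    "Google AI Pro (monthly)": 19.99 * MONTHS,
    "Claude Pro (monthly)": 20.00 * MONTHS,
    "Claude Pro (annual)": 200.00,  # paid up front
}

for name, yearly in sorted(plans.items(), key=lambda item: item[1]):
    print(f"{name:<24} ${yearly:>7.2f}/yr  (${yearly / MONTHS:.2f}/mo)")
```

Claude Pro billed annually comes to $200 a year (about $16.67/month). That is roughly $40 less than the ~$240 a year each monthly plan costs.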

Teams & orgs

- ChatGPT Team — $25/user/mo (annual) or $30/user/mo (monthly). Predictable per-seat pricing with a shared workspace. OpenAI Help Center; OpenAI

- Gemini in Workspace — AI is now “baked in” to Workspace plans, with no separate add-on. Google’s 2025 change simplified pricing by folding Gemini features into core plans (the example uplift in Google’s announcement was +$2/user/mo). Exact per-seat pricing varies by Workspace edition and region. Google Workspace

- Claude Team — $30/user/mo (or $25 on annual). Team workspace with admin/billing. Enterprise is quote-based. anthropic.com

Value tip (teams): If you already pay for Google Workspace, the bundled Gemini route is likely the most economical. Otherwise, ChatGPT Team has the clearest sticker price; Claude Team lists at the same $25–$30 per seat and is often chosen for its conservative, citation-forward style. Google Workspace; OpenAI Help Center; anthropic.com
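Annual billing is cheaper per seat only if you keep the seat long enough. Below is a small Python sketch of the break-even point, using the $25 (annual) and $30 (monthly) per-seat figures quoted above for ChatGPT Team and Claude Team. As a simplification, it assumes an annual seat is billed for all 12 months and cannot be dropped early. Actual contract terms may differ.

```python
from typing import Dict


def breakeven_months(annual_price_per_month: float, monthly_price: float) -> float:
    """Months of use at which month-to-month billing costs as much as a 12-month commitment."""
    return 12 * annual_price_per_month / monthly_price


def team_cost(seats: int, months_needed: int,
              annual_price_per_month: float, monthly_price: float) -> Dict[str, float]:
    """Cost for `seats` users who each need access for `months_needed` months.

    Simplifying assumption: annual seats are billed for all 12 months.
    """
    return {
        "annual_commit": seats * annual_price_per_month * 12,
        "month_to_month": seats * monthly_price * months_needed,
    }


if __name__ == "__main__":
    print(breakeven_months(25, 30))  # per-seat figures quoted above
    print(team_cost(seats=10, months_needed=6, annual_price_per_month=25, monthly_price=30))
```

At these prices the break-even is 10 months. A seat needed for a six-month project is cheaper month-to-month ($180 vs. $300 per seat), while a full-year seat is cheaper on annual billing.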

Power tiers & enterprise

- ChatGPT Pro — $200/month. Meant for heavy users who want expanded access to the most compute-intensive models/features; API still billed separately. OpenAI; OpenAI Help Center

- Google AI Ultra — $249.99/month. Highest access/limits across Gemini features (and 30 TB storage). Gemini

- Claude Max — from $100/month. Higher usage per session than Pro; Claude Enterprise is custom-priced. anthropic.com

Value tip (heavy users): These tiers only pay off if you genuinely hit limits often (deep research, long context, or heavy multimodal/video). Otherwise the ~$20 plans deliver most day-to-day value.

API pricing (separate from app subscriptions)

If you’ll automate literature reviews or build scripts, costs are pay-as-you-go:

- OpenAI API: model-based per-token pricing (o-series, GPT-4.1/4o, etc.). OpenAI Platform

- Gemini API: published per-million-token rates (2.5 Pro tiers) plus separate Google Search grounding charges. Google AI for Developers

- Anthropic API: per-model token pricing (Opus/Sonnet/Haiku) and add-ons like web search or code execution. anthropic.com

Value tip (builders): Use prompt caching and response schemas where offered, and batch long jobs, to cut costs substantially. (All three document caching options and quotas.) Google AI for Developers; anthropic.com
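To see how caching and batching move the bill, here is a rough Python cost estimator. All prices are hypothetical placeholders, not quotes from any vendor; plug in current per-million-token rates from the pricing pages. It treats a batch discount as a single fraction and ignores any separate cache-write or storage fees some vendors charge.

```python
from dataclasses import dataclass


@dataclass
class TokenPrices:
    """USD per million tokens. Placeholder values only; copy real rates from the vendor."""
    input_per_m: float
    output_per_m: float
    cached_input_per_m: float  # rate for input tokens served from a prompt cache


def estimate_cost(prices: TokenPrices, input_tokens: int, output_tokens: int,
                  cached_fraction: float = 0.0, batch_discount: float = 0.0) -> float:
    """Rough USD cost of a job.

    cached_fraction: share of input tokens billed at the cached rate (0 to 1).
    batch_discount: fractional discount for batch processing, if offered (0 to <1).
    Ignores cache-write or storage fees.
    """
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    if not 0.0 <= batch_discount < 1.0:
        raise ValueError("batch_discount must be in [0, 1)")
    cached = input_tokens * cached_fraction
    fresh = input_tokens - cached
    cost = (fresh * prices.input_per_m
            + cached * prices.cached_input_per_m
            + output_tokens * prices.output_per_m) / 1_000_000
    return cost * (1 - batch_discount)


if __name__ == "__main__":
    placeholder = TokenPrices(input_per_m=2.00, output_per_m=10.00, cached_input_per_m=0.50)
    job = dict(input_tokens=2_000_000, output_tokens=200_000)
    print(f"uncached:       ${estimate_cost(placeholder, **job):.2f}")
    print(f"80% cached:     ${estimate_cost(placeholder, **job, cached_fraction=0.8):.2f}")
    print(f"cached + batch: ${estimate_cost(placeholder, **job, cached_fraction=0.8, batch_discount=0.5):.2f}")
```

With those placeholder rates, the example job (2M input, 200k output tokens) comes to $6.00 uncached. It drops to $3.60 with 80% of input served from cache, and to $1.80 if a 50% batch discount also applies.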

Bottom-line verdict for “Cost & Value for Money”

- Best bundle for the price (solo): Gemini (Google AI Pro) — same sticker as ChatGPT Plus but throws in 2 TB storage and tight Workspace integration. Gemini

- Most predictable per-seat for non-Google shops (teams): ChatGPT Team at $25–$30/user/mo. OpenAI Help Center

- Cheapest annual path (solo) + conservative style: Claude Pro at ~$17/mo when billed annually; Team matches ChatGPT’s ballpark. anthropic.com

- Power users: Step up only if you constantly hit limits; ChatGPT Pro ($200), AI Ultra ($249.99), and Claude Max (from $100) are premium and situational. OpenAI; Gemini; anthropic.com